I. Installing Docker

1. Download the offline package

Index of linux/static/stable/x86_64/

2. Extract the archive

tar -xzvf docker-18.06.3-ce.tgz

(ce stands for Community Edition, the free community version; for details, see "docker带ce和不带ce的区别" (Docker with vs. without ce) on Docker-PHP中文网)
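To confirm which directory the archive unpacks into before copying anything, you can list its contents without extracting it. A minimal sketch; `tarball_top_dirs` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# tarball_top_dirs: hypothetical helper that lists the distinct top-level
# entries of a gzip-compressed tarball without extracting it.
tarball_top_dirs() {
  local archive="$1"
  # -t lists entries, -z handles gzip, -f names the archive file;
  # keep only the first path component of each entry and de-duplicate.
  tar -tzf "$archive" | cut -d/ -f1 | sort -u
}

# Example:
#   tarball_top_dirs docker-18.06.3-ce.tgz
```

For the static Docker tarballs this is expected to print a single `docker` directory.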

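3. Copy the extracted binaries to /usr/bin

The archive unpacks into a docker directory, and the service file in the next step starts /usr/bin/dockerd, so the binaries must be copied to /usr/bin:

cp docker/* /usr/bin/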
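The copy step can be wrapped in a small function that checks the extracted directory first. A minimal sketch, assuming the tarball unpacked into a `docker/` directory (as the static Docker tarballs do) and installing to `/usr/bin` because the `ExecStart=/usr/bin/dockerd` line in the service file expects `dockerd` there; `install_docker_binaries` is a hypothetical name:

```shell
#!/usr/bin/env bash
# install_docker_binaries: hypothetical helper that copies the binaries
# unpacked from a static Docker tarball into a target bin directory.
#   $1  directory created by tar (assumed: docker)
#   $2  destination (default /usr/bin, matching ExecStart in docker.service)
install_docker_binaries() {
  local src="${1:-docker}"
  local dest="${2:-/usr/bin}"

  # Refuse to continue if the extracted directory has no dockerd in it.
  if [ ! -f "$src/dockerd" ]; then
    echo "error: $src/dockerd not found; was the tarball extracted?" >&2
    return 1
  fi

  mkdir -p "$dest" || return 1
  local f
  for f in "$src"/*; do
    # Copy each regular file and make sure it is executable.
    if [ -f "$f" ]; then
      cp "$f" "$dest/" || return 1
      chmod 0755 "$dest/$(basename "$f")" || return 1
    fi
  done
}

# Example (as root, from the directory where the tarball was extracted):
#   install_docker_binaries docker /usr/bin
```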

4. Register Docker as a system service

Create the docker.service file (for example with vim /usr/lib/systemd/system/docker.service), paste in the following content, then save and exit:

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

5. Start the Docker service

Reload systemd so it picks up the new unit file, then start Docker:

systemctl daemon-reload

systemctl start docker

6. Check the status of the Docker service

systemctl status docker

7. Enable Docker to start at boot

systemctl enable docker.service

Reference: 离线部署docker (Offline Docker deployment), 千里之行始于足下, CSDN blog

II. Deploying Hadoop with Docker

Before deploying Hadoop, install a JDK and the SSH packages yourself.

1. Download the Hadoop package

Index of /dist/hadoop/common

Note: choose a version that suits your needs.

2. Extract the offline package

tar -xzvf hadoop-2.7.2.tar.gz

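3. Copy the extracted directory to /usr/local/hadoop, the path used in the following steps (-r is needed to copy a directory)

cp -r hadoop-2.7.2 /usr/local/hadoop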

4. Configure the JAVA_HOME variable

Go to the Hadoop configuration directory /usr/local/hadoop/etc/hadoop, edit the hadoop-env.sh configuration file, and add the JAVA_HOME environment variable:

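export JAVA_HOME=/path/to/jdk

(Replace /path/to/jdk with your JDK root directory. Do not wrap it in curly quotes such as “ ”, which the shell treats as literal characters.)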

Save and exit.
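If you are not sure where the JDK lives, it can usually be derived from the `java` binary on the PATH, and the `hadoop-env.sh` edit can be scripted. A minimal sketch, assuming the usual JDK layouts (`<jdk>/bin/java`, or `<jdk>/jre/bin/java` for JDK 8 and earlier), GNU `readlink -f` and GNU `sed -i`; both function names are hypothetical:

```shell
#!/usr/bin/env bash
# java_home_from_bin: hypothetical helper that derives a JDK root from the
# resolved path of the java binary.
java_home_from_bin() {
  local java_bin="$1"
  local home="${java_bin%/bin/java}"   # strip trailing /bin/java
  home="${home%/jre}"                  # JDK 8 and earlier: strip /jre as well
  printf '%s\n' "$home"
}

# set_java_home: hypothetical helper that points JAVA_HOME in hadoop-env.sh
# at the given directory, replacing an uncommented "export JAVA_HOME=" line
# or appending one if none exists.
set_java_home() {
  local env_file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$env_file" 2>/dev/null; then
    # "|" is the sed delimiter because the path contains slashes (GNU sed -i).
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Example (readlink -f follows symlinks such as /usr/bin/java):
#   jh="$(java_home_from_bin "$(readlink -f "$(command -v java)")")"
#   set_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh "$jh"
```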

5. Hadoop's three run modes

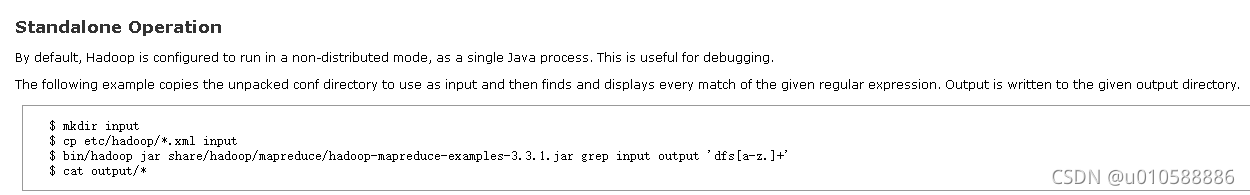

5.1 Standalone mode (default configuration)

By default, Hadoop is configured to run in non-distributed mode, as a single Java process, which is useful for development and debugging.

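This mode needs no further configuration changes; you can test it directly by running one of the MapReduce examples bundled with Hadoop. Run the commands from the Hadoop installation directory, and change the version in the examples jar name (3.3.1 below) to match the version you installed: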

$ mkdir input

$ cp etc/hadoop/*.xml input

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar grep input output 'dfs[a-z.]+'

$ cat output/*

5.2 Pseudo-distributed mode

Hadoop can also run on a single node in pseudo-distributed mode, where each Hadoop daemon runs in a separate Java process.
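The configuration edits in this section (core-site.xml and hdfs-site.xml) can also be generated from the shell instead of edited by hand. A minimal sketch, assuming the files only need the properties listed here (it overwrites the target file); `write_site_xml` is a hypothetical helper, not part of Hadoop:

```shell
#!/usr/bin/env bash
# write_site_xml: hypothetical helper that writes a Hadoop *-site.xml file
# containing the given name=value properties, overwriting the file.
#   $1      output file, e.g. etc/hadoop/core-site.xml
#   $2...   properties in name=value form
# Values are not XML-escaped, so they must not contain <, > or &.
write_site_xml() {
  local out="$1"; shift
  {
    printf '<?xml version="1.0"?>\n'
    printf '<configuration>\n'
    local kv
    for kv in "$@"; do
      # Split on the first "=" only; values such as URIs may contain more.
      printf '  <property>\n'
      printf '    <name>%s</name>\n' "${kv%%=*}"
      printf '    <value>%s</value>\n' "${kv#*=}"
      printf '  </property>\n'
    done
    printf '</configuration>\n'
  } > "$out"
}
```

Run it from the Hadoop installation directory, e.g. `write_site_xml etc/hadoop/core-site.xml fs.defaultFS=hdfs://localhost:9000` and `write_site_xml etc/hadoop/hdfs-site.xml dfs.replication=1`. Regenerating the whole file keeps reruns idempotent, at the cost of discarding any properties already in it.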

Edit the configuration files:

Hadoop/etc/hadoop/core-site.xml

Link: core-site.xml configuration parameters explained

fs.defaultFS: the address of the Hadoop file system

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Hadoop/etc/hadoop/hdfs-site.xml:

Link: hdfs-site.xml configuration parameters explained

dfs.replication: the number of replicas kept for each data block, set to 1 here because a single node runs only one DataNode

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Set up passwordless SSH login
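`sbin/start-dfs.sh` starts the daemons over SSH even on a single node, which is why logging in to localhost must not prompt for a password. The manual `cat ... >> authorized_keys` step in this section adds a duplicate line every time it is repeated; the key setup can be made safe to rerun. A minimal sketch, assuming OpenSSH's `ssh-keygen` and the default `~/.ssh/id_rsa` key path; `authorize_own_key` is a hypothetical name:

```shell
#!/usr/bin/env bash
# authorize_own_key: hypothetical helper that performs this section's key
# setup idempotently: it generates an RSA key only if none exists and adds
# the public key to authorized_keys only if it is not already listed.
#   $1  SSH directory (default: ~/.ssh)
authorize_own_key() {
  local dir="${1:-$HOME/.ssh}"
  { mkdir -p "$dir" && chmod 0700 "$dir"; } || return 1

  if [ ! -f "$dir/id_rsa.pub" ]; then
    # Same flags as the manual step: RSA key with an empty passphrase.
    ssh-keygen -t rsa -P '' -f "$dir/id_rsa" >/dev/null || return 1
  fi

  touch "$dir/authorized_keys"
  # -x: whole-line match, -F: fixed string (the key is not a regex).
  if ! grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys"; then
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  fi
  chmod 0600 "$dir/authorized_keys"
}

# Afterwards, BatchMode makes ssh fail instead of prompting, so this
# succeeds only if passwordless login works:
#   ssh -o BatchMode=yes localhost true && echo "passwordless SSH OK"
```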

Check whether passwordless login is already configured with the following command:

$ ssh localhost

If it is not configured (ssh asks for a password), configure it with the following commands:

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keys

Start Hadoop

1. Format the file system

$ bin/hdfs namenode -format

2. Start the NameNode and DataNode daemons

$ sbin/start-dfs.sh

By default, Hadoop writes its log output to the Hadoop/logs directory.

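3. Browse the NameNode web interface, by default at http://localhost:9870 (the Hadoop 3.x default; Hadoop 2.x releases such as 2.7.2 use http://localhost:50070)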
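`jps`, which ships with the JDK, lists running Java processes by main class name; after `sbin/start-dfs.sh` in pseudo-distributed mode it should show NameNode, DataNode and SecondaryNameNode. A minimal sketch that reports any expected daemon missing from `jps` output; `missing_daemons` is a hypothetical name, and it reads from standard input so it can be exercised without a running cluster:

```shell
#!/usr/bin/env bash
# missing_daemons: hypothetical helper. Reads `jps` output ("<pid> <Name>"
# per line) on stdin and prints each expected daemon name that is absent.
# Returns 0 if nothing is missing, 1 otherwise.
missing_daemons() {
  local running name missing=0
  # Keep only the name column of the jps output.
  running="$(awk '{print $2}')"
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$name"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}

# Example, after sbin/start-dfs.sh:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
# and after sbin/start-yarn.sh:
#   jps | missing_daemons ResourceManager NodeManager
```

If a daemon is reported missing, its log in the Hadoop logs directory is the first place to look.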

4. Create the HDFS directories (directories in the Hadoop file system) required to run MapReduce jobs

$ bin/hdfs dfs -mkdir /user

$ bin/hdfs dfs -mkdir /user/<username>

5. Copy the input files into HDFS

$ bin/hdfs dfs -mkdir input

$ bin/hdfs dfs -put etc/hadoop/*.xml input

6. Run one of the Hadoop examples

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar grep input output 'dfs[a-z.]+'

7. Examine the output files: copy the output directory from HDFS to the local file system and inspect it there

$ bin/hdfs dfs -get output output

$ cat output/*

or view the output directly on HDFS:

$ bin/hdfs dfs -cat output/*

8. Stop the Hadoop daemons

$ sbin/stop-dfs.sh

YARN (resource negotiator) on a single node

In pseudo-distributed mode, MapReduce jobs can also run on YARN. This requires setting a few parameters and, in addition, starting the ResourceManager and NodeManager daemons.

1. Edit the configuration files

Hadoop/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

</configuration>

Hadoop/etc/hadoop/yarn-site.xml:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

2. Start the ResourceManager and NodeManager daemons

$ sbin/start-yarn.sh

3. Browse the ResourceManager web interface, by default at http://localhost:8088/

4. Run a MapReduce job

Use the same commands as for running a MapReduce job on HDFS above.
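The `grep` example used in this tutorial counts how often each match of the regular expression `dfs[a-z.]+` occurs in the input files and writes `count<TAB>match` lines, highest count first. To sanity-check a job's result against the local copy of the input, a rough equivalent built from standard tools can help. This is a sketch assuming POSIX grep, sort, uniq and awk; `local_grep_count` is a hypothetical name, and the order of matches with equal counts may differ from Hadoop's:

```shell
#!/usr/bin/env bash
# local_grep_count: hypothetical helper approximating the Hadoop grep example
# on local files. Prints "count<TAB>match", highest count first.
#   $1      extended regular expression, e.g. 'dfs[a-z.]+'
#   $2...   input files
# awk's $2 holds the whole match only if the regex cannot match spaces,
# which holds for the example's 'dfs[a-z.]+'.
local_grep_count() {
  local regex="$1"; shift
  # -o: print every match on its own line, -h: omit file names, -E: ERE
  grep -ohE "$regex" "$@" |
    sort | uniq -c |                       # count identical matches
    sort -k1,1nr |                         # highest count first
    awk '{ printf "%s\t%s\n", $1, $2 }'    # reformat as count<TAB>match
}

# Example, from the Hadoop installation directory:
#   local_grep_count 'dfs[a-z.]+' etc/hadoop/*.xml
```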

5. Stop the ResourceManager and NodeManager daemons

$ sbin/stop-yarn.sh